Interview with Aspaara Algorithmic Solutions AG

Could you briefly tell us what Aspaara is?

Employees are the most influential production factor and, at the same time, the largest cost factor in most companies. It is therefore important to make the best use of in-house skills. Our artificial intelligence-based optimization engine, the Aspaara® MatchingCore®, identifies hidden internal potential within the company and satisfies customized scheduling criteria while adapting to each client’s specific situation. It ensures that the right people are in the right place at the right time, and that the final result is optimal for the client’s specific needs. Through sophisticated analyses, accurate predictions and optimizations, MatchingCore® is aimed specifically at the recurrent, long-term time allocation of teams, and it complements and supports internal operations.

What is Aspaara’s background story?

Our founders, Alexander Grimm, a physicist with a PhD in Business Administration, and Kevin Zemmer, who holds a PhD in Applied Mathematics, met at ETH Zürich. The history of Aspaara Algorithmic Solutions AG began more than five years ago with the Sola-Match project. We took part as runners in the Sola-Stafette. The challenge there, we noticed, was to put together a team of 14 different runners. We created a platform that matched runners with teams that still had capacity for additional participants. We realized that this way of assessing runners’ skills and connecting them to the right team could be developed further for professional teams within a company. This is how the business idea was born. From there we moved on to tutoring schools, and aircraft ground handling was added relatively quickly. We build allocation solutions for companies in the professional services sector, such as PwC, as well as for logistics and railway companies.

Why is it important that Aspaara exists?

We have been able to save our clients up to 6% of wrongly allocated labor costs and to reduce travel time by up to a quarter. We make sure that employees’ preferences are respected and that the best teams work together. Moreover, we ensure that everyone involved is satisfied, which is how we have achieved sustainable success with our customers. We accompany our customers over a long period of time.

Who can profit from your services?

Staffing is complex because of a variety of constraints. Our precise and predictive decisions enable our clients to automate planning processes and reduce failure rates in staffing while increasing reliability and efficiency. Our customer base is very varied. We work with professional services companies, where we focus in particular on assurance and auditing. We also have clients in the transportation sector, such as aircraft ground handlers, and in logistics. Our clients use the MatchingCore® for the allocation and planning of long-term jobs. Some companies use it once a year to optimize their staffing, as well as when there is staff turnover or a new client. Others, for example ground handlers, have more recurrent needs and use it more frequently. The clients use the MatchingCore® continuously and independently as “Software-as-a-Service”. Our customers typically have at least 350 permanent employees (internal and external).

Your client base is very varied; can you give some examples of your projects?

At PwC Switzerland we conceived, implemented and deployed the Aspaara MatchingCore® for internal staff-matching operations across all 14 of their offices. While respecting all kinds of optimization criteria, our MatchingCore® aims to minimize travel costs. We also seek to increase employee satisfaction by taking satisfying career paths into account, and we find the best matches to increase team continuity.

At Zurich Airport, Aspaara® Groundcloud® uncovered how to save over 5% of wrongly allocated wage costs while increasing process reliability for customer airlines across all air-side and land-side operations of a ground handling company.

What are your biggest challenges?

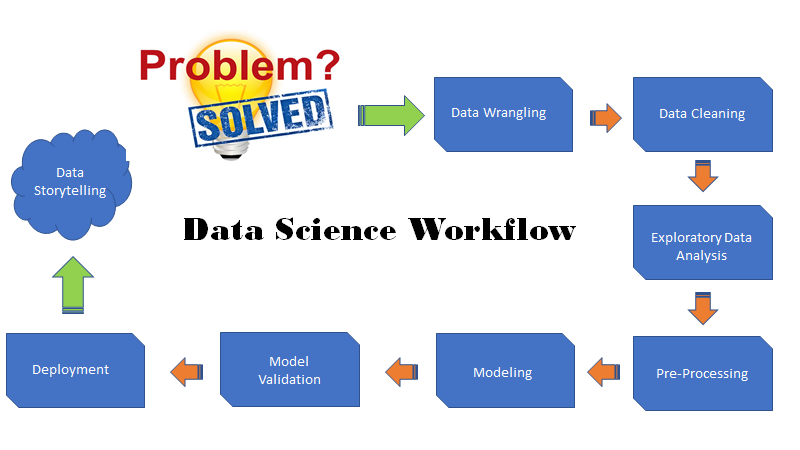

What is special about us is that our services are developed individually for each client’s specific needs. MatchingCore® adapts to each client’s individual planning challenges with the help of artificial intelligence and machine learning, which takes a few weeks.

We are currently working hard on bringing the learning cycles for the customization down to a few days. This way we will be able to offer our clients a fully customized plan within a few days, which is a technical challenge. But we love challenges!

How do you see the future of Aspaara and what is your long-term goal?

In the short term, we would like to help our existing customers, as well as those who would like to become customers, with planning optimization that helps them through the current Covid-19 situation and strengthens them beyond it.

Our long-term goal is to become the best and most innovative provider of customized resource planning software in Europe.

Use Case Talks Series

Three times a year we organize the Use Case Talks series on behalf of the Swiss Alliance for Data Intensive Services. At these events, experts discuss artificial intelligence. The participants are roughly one third industrial, one third academic and one third individual members. The next Use Case Talk will take place on the 2nd of November. Save the date and contact us if you are interested in participating! Find out more here.